fr: NLP, Language & Locality

With f<a+i>r research cohorts & colleagues currently exploring NLP, Language & Locality in Thai, Arabic, Aramaic, and Latin American Spanish

Dr. Marwa Soudi will present a tool she developed together with her research team at Tallinn University, Estonia, that aims to assist companies, particularly small and medium-sized enterprises (SMEs), in evaluating and adopting responsible artificial intelligence (AI) practices.

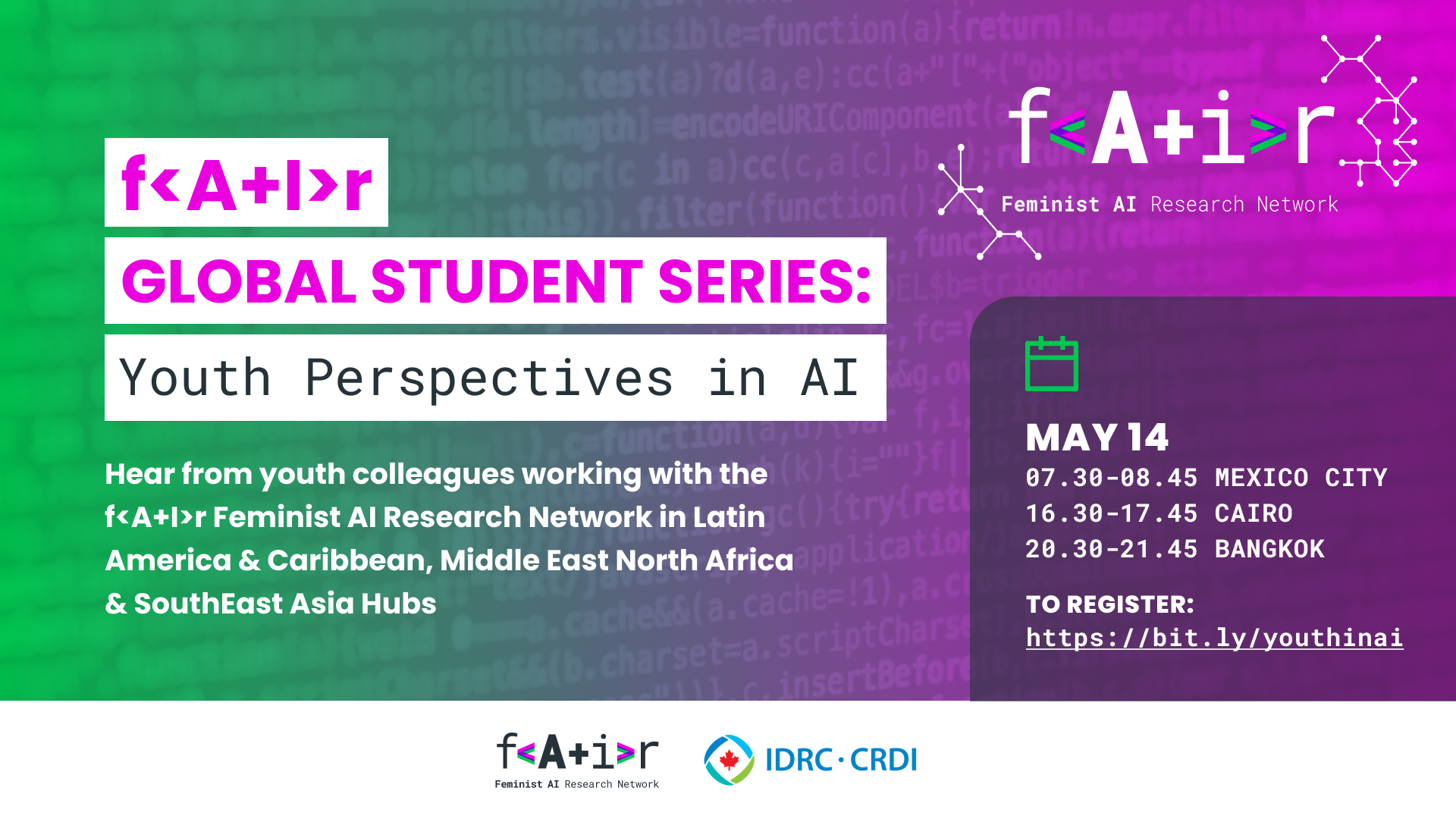

Join us to hear from youth colleagues working with the f<A+I>r Feminist AI Research Network Hubs in Latin America & Caribbean, Middle East & North Africa, and Southeast Asia. 07. […]

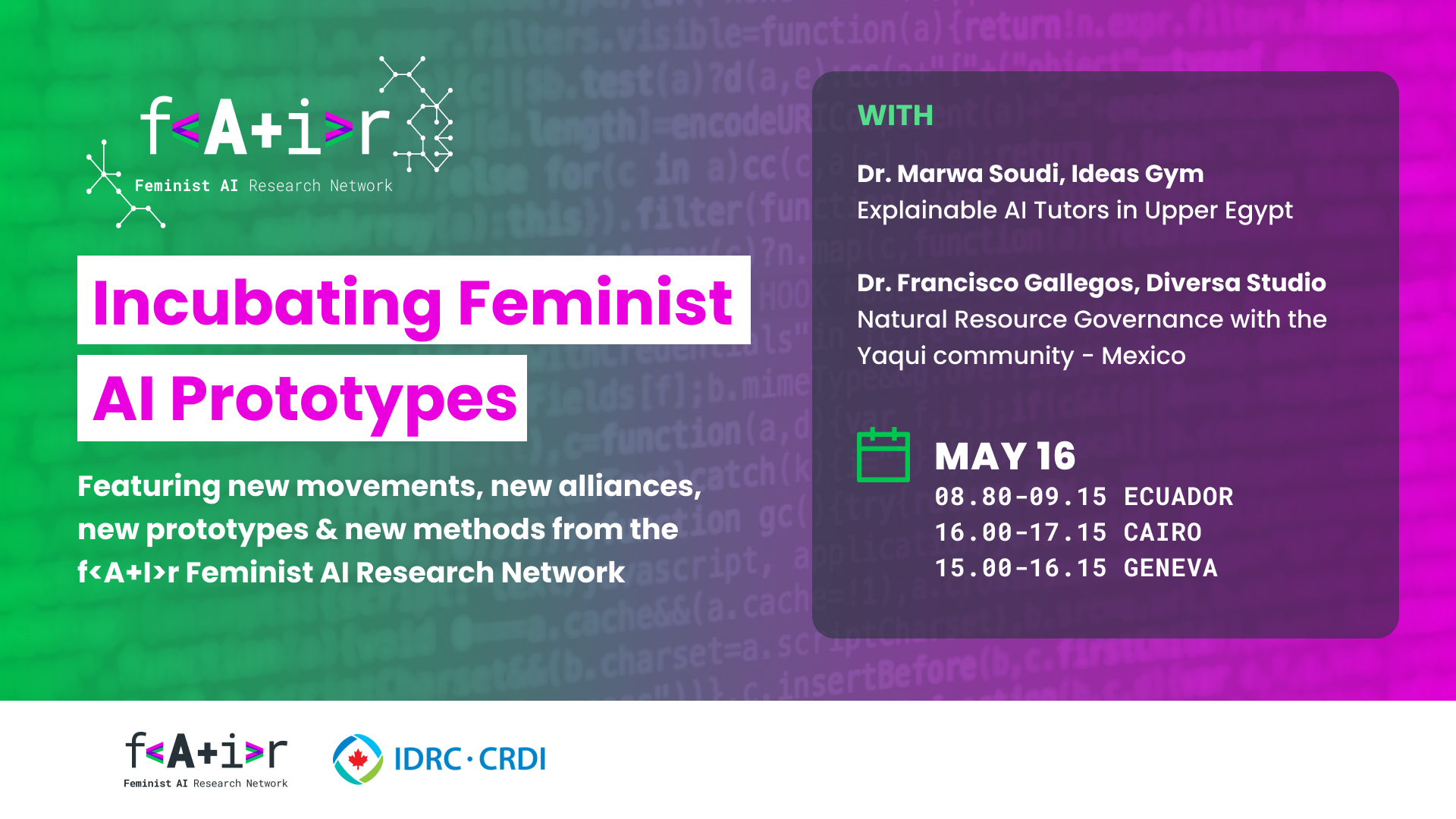

Featuring new movement, new alliances, new prototypes & new methods from the f<a+i>r Feminist AI Research Network: Explainable AI Tutors (Upper Egypt) and Natural Resource Governance with Yaqui (Mexico)

Register for the webinar here. It gives us great pleasure to invite you to the Access to Knowledge for Development Center (A2K4D)’s upcoming webinar as the Middle East and North Africa (MENA) […]

Human Rights & AI: Inclusive Human Rights Frameworks for New Technologies How can Human Rights Frameworks be the starting point for the creation of new tech & innovation? What questions […]

Bringing together NLP researchers from four regions of the world. This webinar is part of our F<A+I>R Feminist AI Research Network global series in which we collectively think about […]

This French-language webinar will focus on Francophone AI.

Francophone AI: What is it?

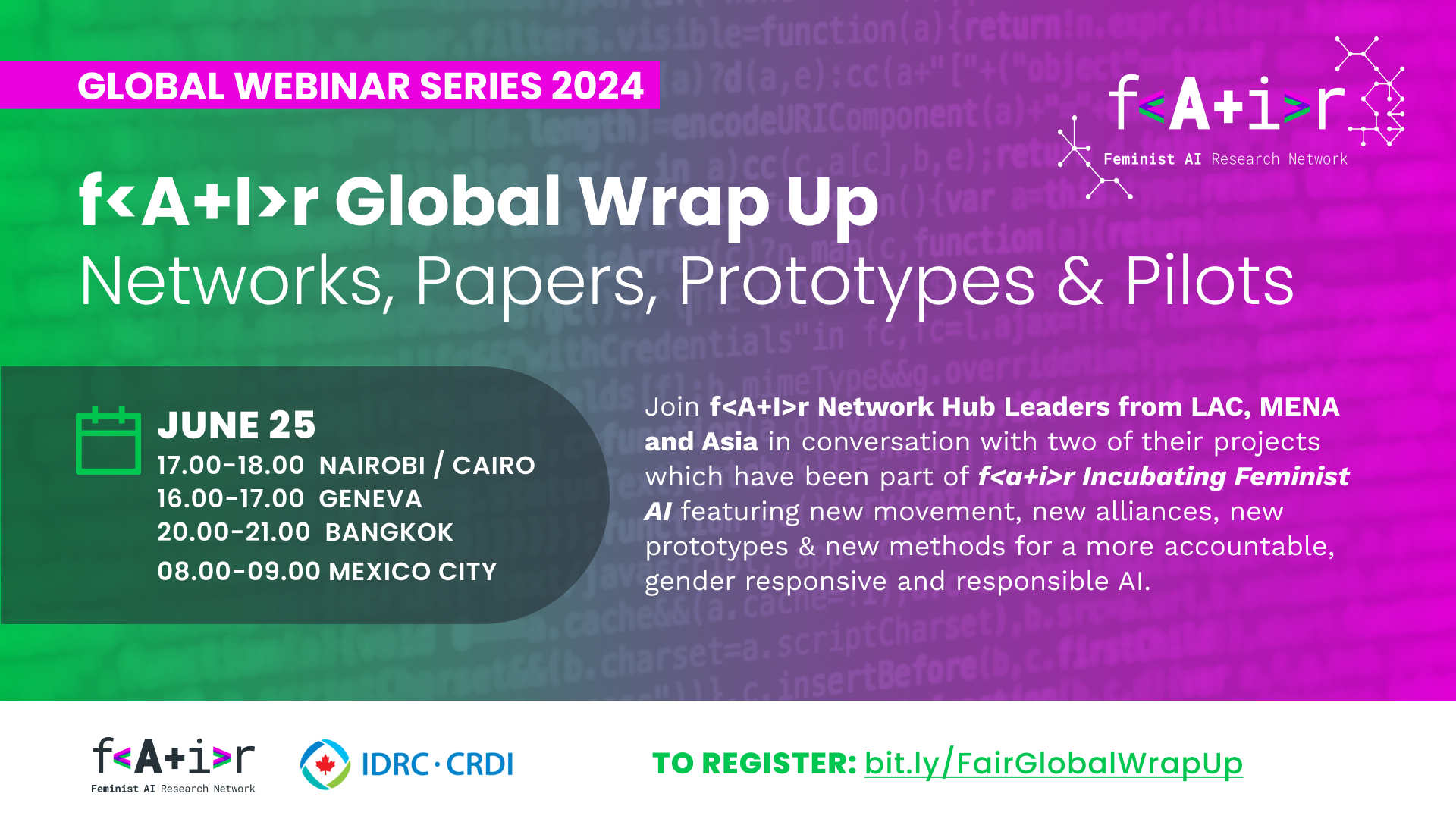

In this webinar, we will be wrapping up the F<A+I>R Feminist AI Research Network of projects with the various members of our cohorts sharing on the journey of bringing their […]

In this open studio, Daniela Moyano will speak about Sof+IA: a prototype chatbot designed to report and provide guidance on Digital Gender Violence (DGV) in Chile. This evaluation examines its development through Data Feminism principles, highlighting how it challenges power structures, prioritizes survivor needs, and integrates emotion into technology design.

It is well known that LLMs express harmful biases in their predictions. The main source of these biases is the training datasets, which are too large and expensive to check thoroughly. In this open studio, Francesca Lucchini will explore how we can leverage the bias in LLMs and use the models to examine massive datasets, discovering starting points for a data audit.